Tutorial · 2 min read

AI-Based Transcription in INTERACT: Automated Speech-to-Text with Speaker Recognition

Learn how to leverage AI-powered auto-transcription features in INTERACT using OpenAI's Whisper model for efficient speech-to-text conversion locally and GDPR-compliant.

Streamline your research workflow with INTERACT’s AI-powered transcription capabilities. This tutorial demonstrates how to use OpenAI’s Whisper language models for automated transcription of audio and video recordings, complete with speaker recognition features. Perfect for researchers and analysts who need efficient, accurate transcription solutions wihtout the need to upload any audio or video files.

What You’ll Learn

- Configure AI-based transcription settings using Whisper language models

- Set up speaker identification for multiple participants

- Generate and manage SRT subtitle files

- Customize transcription output formats and display options

- Process batch transcriptions for multiple recordings

- 0:02 Introduction to AI transcription

- 0:17 File preparation and requirements

- 0:46 Accessing auto-transcribe features

- 1:04 Language model selection

- 1:36 SRT file handling

- 1:59 Speaker identification setup

- 2:18 Export format options

- 2:33 File storage settings

- 3:01 Viewing transcription results

- 3:29 Batch transcription process

Tutorial Overview

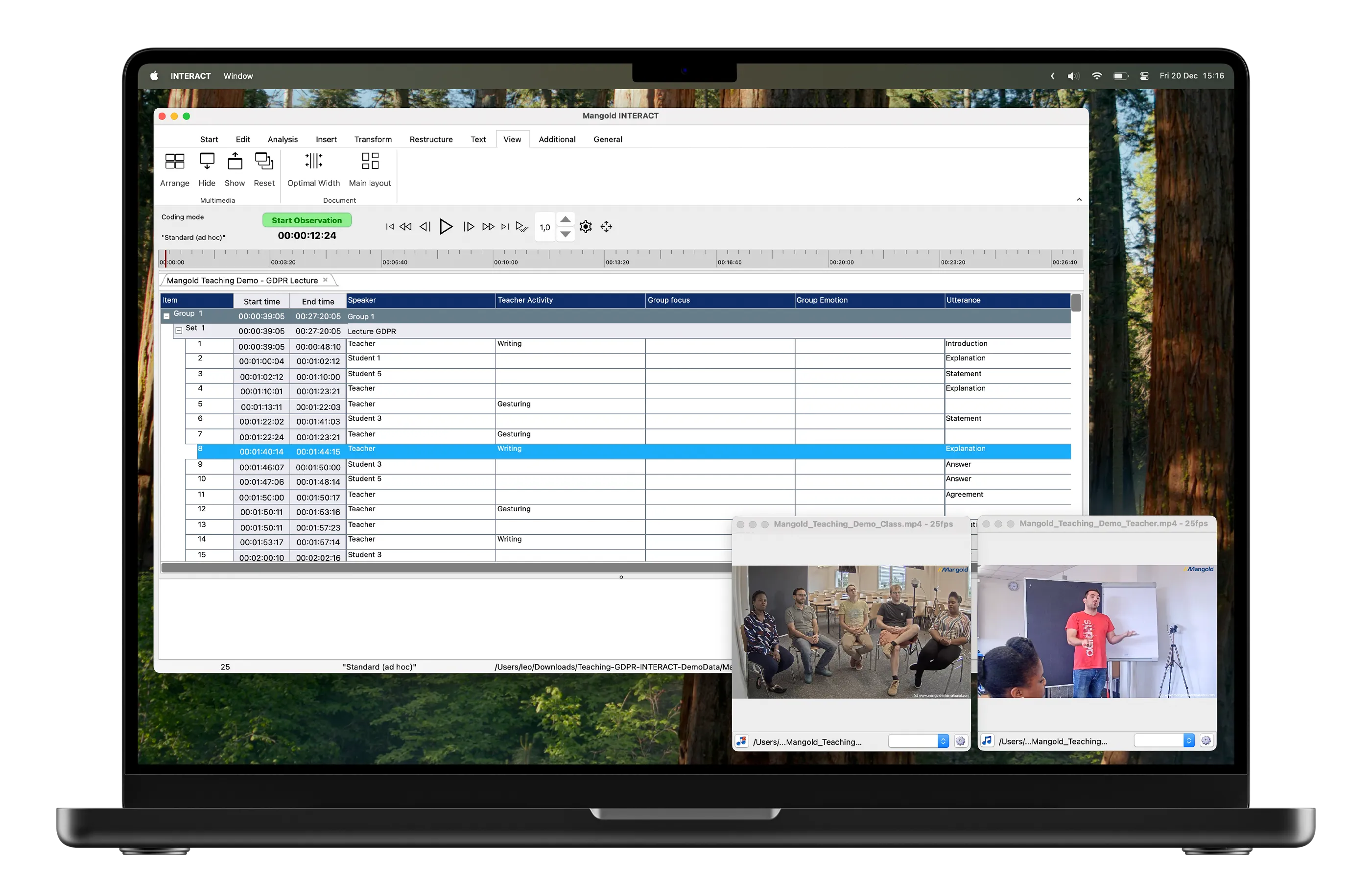

INTERACT’s AI-based transcription feature revolutionizes the way researchers handle audio and video content analysis. By utilizing OpenAI’s Whisper language models locally, users can automatically convert speech to text with remarkable accuracy and efficiency.

The process begins with a properly linked audio or video file in your INTERACT dataset. The system offers flexibility in the choice of language models, from the efficient ‘Base’ model to more comprehensive options for systems with powerful GPUs. This allows users to strike a balance between transcription accuracy and processing speed depending on their specific needs and available hardware.

A standout feature is the speaker identification capability, which can automatically distinguish between different voices in the recording. This is particularly useful for interview analysis, focus group research, or any scenario involving multiple participants with easily recognizable voices. The system also provides various export formats and viewing options, including word-level or sentence-level transcription events.

For larger research projects, INTERACT supports batch processing the transcription of multiple recordings, streamlining workflows for extensive datasets. The generated transcriptions can be stored as an INTERACT data file, making it easy to verify and analyze the results, as well as combining those transcriptions with behavioral observations or adding content-based codes to categorize the sentences.

INTERACT: One Software for Your Entire Research Workflow

From data collection to analysis—including GSEQ integration—INTERACT has you covered.